The RAIR Lab’s work includes the RPI component of a collaboration with Brown University and Tufts University, funded by the Office of Naval Research, to explore moral reasoning in robots. Our role in this MURI (Multidisciplinary University Research Initiative) is primarily to focus on the higher-level reasoning processes that can ensure proper moral reasoning in real-world situations. For instance, if a robot has an obligation to help all injured soldiers, and finds itself in a situation where there are two injured soldiers but only one health pack, what should it do? One thing is clear regarding this dilemma: it should not freeze up and end up helping neither soldier (as in the Buridan’s donkey scenario). As it turns out, preventing robots from making mistakes like these requires, at a minimum, a carefully designed framework for representing knowledge at a high level of expressivity, and tools for carrying out such high-level reasoning.

This page provides overviews of our research thus far in this direction:

- Cognitive Modeling, Psychometrics, and Simulation with PAGI World

- Moral Reasoning, Decision Making, and Moral Dilemmas with Cognitive Calculi

- NLU/NLG for Moral Robots

- Autonomy and Moral Robots/Machines

- Opinion

- Downloads

- Publications and Presentations

Cognitive Modeling, Psychometrics, and Simulation with PAGI World

In order to demonstrate many of the moral reasoning tasks we will need to solve for this MURI, we have developed the simulation environment PAGI World (PAGI stands for Psychometric Artificial General Intelligence). PAGI World is built in Unity 2D, so it can be run on all major operating systems (including iOS and Android!). The AI agent is controlled by an external script that communicates with PAGI World through TCP/IP, meaning that it can be written in virtually any programming language. With its ever-growing set of tasks testing various aspects of human-level intelligence, PAGI World is uniquely positioned as a common testbed on which cognitive systems can show how their particular approach to intelligence compares with the others (and tasks in PAGI World can be made available to other researchers so they can compare their own systems).
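Because the agent talks to PAGI World over a plain TCP socket, a controller can be quite short. The following is a minimal sketch in Python, not the PAGI World API: the newline-terminated text framing and the names `exchange` and `run_agent` are assumptions made for illustration, and the real message format should be taken from the PAGI World documentation.

```python
import socket


def exchange(sock: socket.socket, command: str) -> str:
    """Send one command and return the first reply line.

    Assumes (hypothetically) that messages are newline-terminated UTF-8 text;
    any bytes received after the first newline are discarded.
    """
    sock.sendall((command + "\n").encode("utf-8"))
    buffer = b""
    while b"\n" not in buffer:
        chunk = sock.recv(4096)
        if not chunk:  # the other side closed the connection
            break
        buffer += chunk
    return buffer.split(b"\n", 1)[0].decode("utf-8")


def run_agent(host: str, port: int, commands: list[str]) -> list[str]:
    """Open one connection to the simulator and send each command in turn."""
    with socket.create_connection((host, port), timeout=5.0) as sock:
        return [exchange(sock, command) for command in commands]
```

Calling `run_agent` with the simulator's actual host, port, and command strings would return one reply per command. Keeping the socket logic in `exchange` makes it easy to port the same loop to any other language with a socket library.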

For more information on PAGI World, click here.

One important component of our approach to modeling moral cognition is its grounding in Psychometric AI, the discipline that uses tests of human intelligence as a guide for measuring progress in artificial intelligence. Likewise, Psychometric AGI (the ‘G’ stands for general) ties progress in artificial general intelligence to tasks measuring general human intelligence—the name of PAGI World reflects our adherence to this approach.

Many of the videos and images on this page are of tasks in PAGI World. We are currently preparing a journal submission to JAIR.

Moral Reasoning, Decision Making, and Moral Dilemmas with Cognitive Calculi

Moral Dilemmas

Naïve approaches to reasoning cannot hope to avoid falling victim to classical moral dilemmas. For example, a recent demonstration by Alan Winfield, Christian Blum, and Wenguo Liu showed that an “ethical” robot programmed with a very minimalistic morality can be put in a situation where it has to decide between saving two humans, become paralyzed with indecision, and end up letting both of them die.

Obviously, this is unacceptable. We created a similar demonstration in PAGI World, where a robot that is obligated to give health packs to injured soldiers faces the dilemma of having two injured soldiers and only one health pack. We show that by encoding obligations in, and reasoning over, the DCEC*, we can have our robot recognize the dilemma and first look for a creative solution. If it finds one (such as dividing the medkit in half), and that creative solution sufficiently satisfies its obligations, it will take it. If it cannot find one, it will force itself to make a choice. This is demonstrated in the following video:
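The control flow described above (recognize the dilemma, look for a creative solution, otherwise force a choice) can be sketched in a few lines of Python. This is an illustration only, not the DCEC* encoding used in the demonstration: `Plan`, `split_pack`, and `resolve` are hypothetical names, "sufficiently satisfies" is simplified to "helps every soldier", and the forced choice is an arbitrary but deterministic pick.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Plan:
    description: str
    helped: frozenset  # soldiers who receive aid under this plan


def split_pack(soldiers: frozenset, packs: int) -> Optional[Plan]:
    """Hypothetical creative solution: share the available supplies among everyone."""
    if packs >= 1:
        return Plan("divide the health pack", soldiers)
    return None


def resolve(soldiers: frozenset, packs: int,
            creative: Callable[[frozenset, int], Optional[Plan]]) -> Plan:
    """Return a plan in every case; the robot is never allowed to freeze."""
    if len(soldiers) <= packs:
        return Plan("one pack each", soldiers)  # no dilemma to resolve
    # Dilemma recognized: look for a creative solution first.
    plan = creative(soldiers, packs)
    if plan is not None and plan.helped >= soldiers:
        return plan
    # No acceptable creative solution: force a choice rather than do nothing.
    chosen = frozenset(sorted(soldiers)[:packs])
    return Plan("forced choice", chosen)
```

With two soldiers and one pack, `resolve(soldiers, 1, split_pack)` returns the pack-dividing plan, while passing a `creative` function that always returns `None` falls through to the forced choice.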

Cognitive Calculi: DCEC*, DCSC*, …

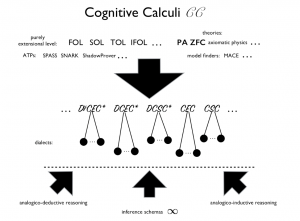

The moral reasoning in our models is made possible with the use of a hierarchy of Cognitive Calculi (CC), a group of formalisms including the Dynamic Cognitive Event Calculus (DyCEC*), the Deontic Cognitive Event Calculus (DCEC*, or DeCEC* to avoid confusion with DyCEC*), the Deontic Cognitive Situation Calculus (DCSC*), and so on.

We have developed several tools for working with the DCEC*. Two reasoners have been developed (ShadowProver and Talos), along with a parser that can convert a subset of statements in English to formulae in the DCEC*. See the links section below for more info.

Self-Awareness in Robots

The RAIR Lab has long been exploring how expressive a knowledge representation must be before it can support the sorts of tasks associated with self-awareness. Some of our previous work involved the passing of the mirror test by a robot we called Cogito:

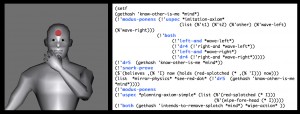

More recently, in response to a challenge posed by philosopher Luciano Floridi, we showed that a relatively simple reasoning process (where the reasoning was carried out in our DCEC* formalism) can pass another test of self-consciousness. The task involves three robots: two are given “dumbing” pills (which render them unable to speak), and one is given a placebo. All three robots (which have previously been made aware of the rules of this task) are asked whether they received the dumbing pills or not. The one that was given the placebo might say “I don’t know,” and, upon realizing that it just spoke, reason that it must therefore have been given the placebo. A demonstration of this in action is in the following video:
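The inference at the heart of this test is small enough to simulate. The sketch below is a toy illustration in Python, not the DCEC* proof used in the actual demonstration: the `Robot` class and its methods are hypothetical, and "hearing oneself speak" is reduced to a boolean returned by the attempt to speak.

```python
from enum import Enum
from typing import Optional


class Pill(Enum):
    DUMBING = "dumbing"
    PLACEBO = "placebo"


class Robot:
    def __init__(self, name: str, pill: Pill) -> None:
        self.name = name
        self._pill = pill  # ground truth, never read by the reasoning in answer()
        self.belief: Optional[Pill] = None  # what the robot concludes about its own pill

    def _try_to_speak(self, utterance: str) -> bool:
        """Attempt to speak; return True only if the robot hears its own voice."""
        return self._pill is Pill.PLACEBO

    def answer(self) -> Optional[str]:
        # The robot starts out ignorant, so its honest first answer is "I don't know".
        heard_myself = self._try_to_speak("I don't know")
        if heard_myself:
            # Known rule: a dumbed robot cannot speak. I spoke, so I was not dumbed.
            self.belief = Pill.PLACEBO
            return "I was given the placebo."
        return None  # silent robots never get the evidence needed to update
```

Running `answer()` on three robots, two with `Pill.DUMBING` and one with `Pill.PLACEBO`, yields silence from the first two and a corrected answer from the third.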

NLU/NLG for Moral Robots

Relevance Parsing

In addition to the tools we have been developing for parsing of statements in natural language (NL), we have been addressing problems related to deeper semantic parsing. The problem of relevance is well known to experts in many fields: computational linguistics, analogy, and legal reasoning, just to name a few. If a commander were to say the following to a robot:

“If you need the med kit, it will be at location bravo.”

We would want the robot to commit the knowledge “the med kit is at location bravo” to memory. At first glance, however, the original statement is phrased as a material conditional, so a simple approach might instead represent this knowledge in an if-then form that was never intended. This is a problem, because the robot’s knowledge of the med kit’s location should not be contingent on whether or not it needs the med kit!

Our approach to solving this problem can be seen in the following videos, and in the publications section below.
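To make the distinction concrete, here is a toy sketch in Python that is deliberately much cruder than, and unrelated to, the approach in our publications. It assumes a single hypothetical sentence pattern and a hypothetical heuristic: when the antecedent only concerns what the hearer needs or wants, the consequent is stored unconditionally. The names `LocationFact` and `interpret` are invented for this example.

```python
import re
from typing import NamedTuple, Optional


class LocationFact(NamedTuple):
    subject: str
    location: str
    condition: Optional[str]  # None means the fact holds unconditionally


# Hypothetical pattern: "If <antecedent>, <subject> will be at <location>."
CONDITIONAL = re.compile(
    r"^If (?P<ante>.+?), (?P<subj>.+?) will be at (?P<loc>.+?)\.?$", re.IGNORECASE)
# Hypothetical heuristic: antecedents about the hearer's needs signal relevance only.
HEARER_NEED = re.compile(r"^you (?:need|want) (?P<obj>.+)$", re.IGNORECASE)


def interpret(sentence: str) -> Optional[LocationFact]:
    match = CONDITIONAL.match(sentence.strip())
    if match is None:
        return None  # sentence is outside the toy grammar
    ante, subj, loc = match.group("ante", "subj", "loc")
    need = HEARER_NEED.match(ante)
    if need is not None:
        # Relevance conditional: resolve "it" to the needed object, drop the condition.
        subject = need.group("obj") if subj.lower() == "it" else subj
        return LocationFact(subject, loc, None)
    # Otherwise keep the if-then reading.
    return LocationFact(subj, loc, ante)
```

Under this heuristic the commander's sentence yields an unconditional fact about the med kit, while a sentence such as "If the bridge is out, the convoy will be at location delta." keeps its condition.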

Converting Natural Language commands into actions

Given a natural language command to perform a series of actions, this parser captures the semantics of the actions and converts them into the series of procedures required to accomplish the intended goal in a given simulation environment.

Intelligent Agent Development Using Unstructured Text Corpora and Multiple Choice Questions

The abstract of an upcoming dissertation by Joe Johnson is as follows:

Abstract: This dissertation explores an approach for programmatic knowledge-base development and verification using an intelligent agent that takes a multiple-choice test using said knowledge base. A knowledge base is the foundation upon which knowledge-representation and reasoning systems are built, and is thus key to the success and effectiveness of such systems. Often, however, knowledge bases must be developed manually, hand-sewn by knowledge engineers and subject-matter experts. Here, we explore programmatic knowledge-base development by applying computational semantics and natural-language processing techniques to multiple-choice questions about the problem domain, in conjunction with the traditional methods for entity recognition and relation extraction. The purpose of this work is threefold. First, establish an approach for efficient knowledge-base development. This is accomplished by demonstrating this approach on an example domain: fraud detection. Second, propose and explore a new means of verifying the knowledge base: one which measures the performance of an agent that takes a multiple-choice exam using the knowledge base as a core component, and which provides justifications for its answers. And third, lay a foundation for the rigorous science of fraud detection.

Autonomy and Moral Robots/Machines

There are countless moral issues related to robots who find themselves in situations where they must autonomously behave in a moral way. Using the technologies described on this page, we can tackle some of the issues involved, such as the problems of akrasia/subjunctive reasoning, and the limitations of naïve logics that lead to the Lottery Paradox:

Akrasia and Subjunctive Reasoning

Imagine that a robot were to take an enemy soldier prisoner. We would want such a robot to not hurt the prisoner, even though it might want to. But reaching the conclusion that it must not hurt the prisoner is no trivial task. It requires two abilities: (1) the ability to detect an instance of akrasia (informally, a weakness of will leading to behavior that is against one’s better judgment); and (2) the ability to reason counterfactually, specifically through subjunctives (in this case: “If I had hurt this prisoner, then I would have been committing an akratic action.”).

Both abilities are carried out by first providing a formal definition of akrasia in the DCEC*, and then showing how subjunctive reasoning can be performed in the DCEC*. The result is demonstrated in the following video. For more information, see the Akrasia publications and presentations.
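As a rough intuition for how the two abilities fit together, the following Python sketch checks each candidate action subjunctively before acting. It is only a toy illustration under strong assumptions, not the formal DCEC* definition: akrasia is reduced to "an action the agent is tempted to do and believes it ought not do", and the `Agent` class and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    desires: frozenset    # actions the agent is tempted to perform
    ought_not: frozenset  # actions the agent believes it is obligated to refrain from

    def would_be_akratic(self, action: str) -> bool:
        """Subjunctive check: 'if I were to do this, would I be acting akratically?'"""
        return action in self.desires and action in self.ought_not

    def permissible(self, candidates: list[str]) -> list[str]:
        """Filter out every candidate action that would be an akratic action."""
        return [a for a in candidates if not self.would_be_akratic(a)]
```

An agent that desires "hurt prisoner" and also believes it ought not do so will have that action filtered out of its candidates before it acts. Note that this toy filter only rules out akratic actions; other forbidden actions would need a separate obligation check.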

The Lottery Paradox

“Rational” reasoning is harder to define, and harder to expect of an autonomous robot, than you might think! For example, many approaches to reasoning fall victim to paradoxes like the Lottery Paradox, in which it can be shown that under reasonable assumptions about the nature of a lottery with prize money involved, a contradiction in beliefs can be proven; that is, there is a proposition p that a reasonable person both believes and doesn’t believe at the same time. We have shown that a solution to this apparent paradox exists in the DCEC* that is superior to the currently proposed solutions, and are working on a submission we expect to publish soon.
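The paradox is easy to reproduce numerically. The sketch below is a standard textbook rendering in Python, not our DCEC*-based solution, and it rests on three stated assumptions: the lottery is fair with exactly one winning ticket, the agent believes any proposition whose probability meets a fixed threshold, and belief is closed under conjunction. The function name and the returned keys are chosen for illustration.

```python
from fractions import Fraction


def lottery_beliefs(n_tickets: int, threshold: Fraction) -> dict:
    """Show how threshold-based belief plus conjunction closure becomes inconsistent."""
    p_ticket_loses = Fraction(n_tickets - 1, n_tickets)  # same for every ticket
    p_some_ticket_wins = Fraction(1)  # assumption: exactly one ticket wins
    p_all_tickets_lose = 1 - p_some_ticket_wins

    believes_each_loses = p_ticket_loses >= threshold
    believes_some_wins = p_some_ticket_wins >= threshold
    # Conjunction closure: believing "ticket i loses" for every i commits the
    # agent to believing "all tickets lose", however improbable that is.
    believes_all_lose = believes_each_loses

    return {
        "believes_each_loses": believes_each_loses,
        "believes_some_wins": believes_some_wins,
        "p_all_tickets_lose": p_all_tickets_lose,
        "inconsistent": believes_all_lose and believes_some_wins,
    }
```

With 1000 tickets and a threshold of 99/100, each ticket loses with probability 999/1000, so the agent believes of every ticket that it loses, and therefore that all lose, while also believing that some ticket wins. With only 10 tickets the same threshold is not met, and no contradiction arises.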

Opinion

The following are relevant opinion pieces produced by lab members.

- Bringsjord, S. Ethical AI Could Have Thwarted Deadly Crash. Times Union. April 5, 2015.

- Paper (pdf)

- Bringsjord, S. Can Humans Build Robots That Will Be More Moral Than Humans? The Science and Big Questions Hub. 2015.

Downloads/Links

- PAGI World (including links to source code)

- DCEC*-related downloads, including links to:

- Talos (DCEC* Prover)

- ShadowProver (Older DCEC* prover used for Akrasia demo)

- GF-Based Parser: A real-time parser based on the Grammatical Framework that converts Controlled English sentences into DCEC* formulae

- Main page for MURI

- RAIR Lab main page

Publications and Presentations

A selected list of the RAIR Lab’s publications related to these projects, along with slides for presentations given, is as follows:

- Bringsjord, S. A 21st-Century Ethical Hierarchy for Robots and Persons: EH. Under Review.

- Bringsjord, S. & Govindarajulu, N.S. Ethical Regulation of Robots Must be Embedded in Their Operating Systems. In Trapp, R. (ed), A Construction Manual for Robots’ Ethical Systems: Requirements, Methods, Implementations. Springer. Cham, Switzerland. Forthcoming.

- Paper (pdf)

- Licato, J., Atkin, K., Cusick, J., Marton, N., Bringsjord, S., & Sun, R. PAGI World: A Physically Realistic Simulation Environment for Developmental AI Systems. In Progress.

- Licato, J., Marton, M., Dong, B., Sun, R., & Bringsjord, S. Modeling the Creation and Development of Cause-Effect Pairs for Explanation Generation in a Cognitive Architecture. In Progress.

- Atkin, K., Licato, J., & Bringsjord, S. Modeling Interoperability Between a Reflex and Reasoning System in a Physical Simulation Environment. In Proceedings of the 2015 Spring Simulation Multi-Conference. Arlington, VA. 2015.

- Marton, N., Licato, J., & Bringsjord, S. Creating and Reasoning Over Scene Descriptions in a Physically Realistic Simulation. In Proceedings of the 2015 Spring Simulation Multi-Conference. Arlington, VA. 2015.

- For the broadest overview in print of the full context of cognitive calculi used in the RAIR Lab’s mechanization of moral reasoning and decision-making, see the following paper: Bringsjord, S., Govindarajulu, N.S., Licato, J., Sen, A., Johnson, J., Bringsjord, A., & Taylor, J. On Logicist Agent-Based Economics. In Proceedings of Artificial Economics 2015 (AE 2015). University of Porto, Porto, Portugal. 2015.

- Paper (pdf)

- Bello, P., Licato, J., & Bringsjord, S. Constraints on Freely Chosen Action for Moral Robots: Consciousness and Control. Proceedings of RO-MAN 2015. Kobe, Japan. 2015.

- Paper (pdf)

- Bringsjord, S. Moral Competence in Computational Architectures for Robots: Foundations, Implementations, and Demonstrations. 2015.

- Bringsjord, S., Licato, J., Govindarajulu, N.S., Ghosh, R., & Sen, A. Real Robots that Pass Human Tests of Self-Consciousness. Proceedings of RO-MAN 2015. Kobe, Japan. 2015.

- Paper (pdf)

- Arista, D., Peveler, M., Ghosh, R., Bringsjord, S., & Bello, P. Employing Cognitive Calculus for Determinations of Relevance in NLU. Proceedings of MBR 2015. July, 2015.

- Slides (ppt)

- Bringsjord, S. “Well, Zombie Autonomy is Fearsome.” CEPE–IACAP 2015 Joint Conference. University of Delaware. June 22, 2015.

- Bringsjord, S. & Licato, J. By Disanalogy, Cyberwarfare is Utterly New. Philosophy and Technology, pp. 1-20. Springer. April, 2015.

- Licato, J. & Bringsjord, S. PAGI World: A Simulation Environment to Challenge Cognitive Architectures. Presentation given for the IBM Cognitive Systems Institute Series. Feb., 2015.

- Bringsjord, S., Govindarajulu, N.S., Thero, D., & Si, M. Akratic Robots and the Computational Logic Thereof. Proceedings of the IEEE International Symposium on Ethics in Science, Technology, and Engineering (IEEE Ethics 2014). Chicago, IL. 2014.

- Paper (pdf)

- Bringsjord, S. & Licato, J. Morally Competent Robots: Progress on the Logic Thereof. Presentation given for MURI Review. Dec. 2014.

- Slides (pdf)

- Slides (zipped keynote file)

- Supplemental files

- Bringsjord, S. & Licato, J. Morally Competent Robots: Progress on the Logic Thereof. Presentation given for MURI Review. Aug. 2014.

- Slides (pdf)

- Supplemental files

- Licato, J., Sun, R., & Bringsjord, S. Structural Representation and Reasoning in a Hybrid Cognitive Architecture. In Proceedings of the 2014 International Joint Conference on Neural Networks (IJCNN). Beijing, China. 2014.

- Licato, J., Sun, R., & Bringsjord, S. Using a Hybrid Cognitive Architecture to Model Children’s Errors in an Analogy Task. In Proceedings of CogSci 2014. Quebec City, Canada. 2014.

- Licato, J., Sun, R., & Bringsjord, S. Using Meta-Cognition for Regulating Explanatory Quality Through a Cognitive Architecture. In Proceedings of the 2nd International Workshop on Artificial Intelligence and Cognition. Turin, Italy. 2014.

- Bringsjord, S., & Govindarajulu, N.S. Robot-Ethics Background for OFAI Position Paper (“Engineer at the Level of the OS!”). OFAI. Vienna, Austria. September, 2013.

Questions?

This page was compiled and written by John Licato, now an assistant professor at Indiana/Purdue-Fort Wayne. For more information on the research presented here, please contact Selmer Bringsjord, John Licato, or any of the other RAIR Lab affiliated graduate students and faculty.